Three XAI methods (SHAP, LIME, and Grad-CAM) are transforming clinical AI from mysterious black boxes into transparent decision-support tools. Essential reading for healthcare professionals.

Explainable AI: Unveiling the Black Box

An AI system alerts a radiologist by detecting a suspicious lesion on a medical scan, with 94% certainty. While this algorithm has outperformed leading experts in clinical trials, a crucial question remains: why did it flag this image? What specific features or patterns triggered this alert?

Without a clear explanation, even the most powerful AI resembles a digital crystal ball—impressive indeed, but unsuitable for life-and-death decisions. We face the fundamental paradox of AI in healthcare: we possess tools capable of diagnosing with unmatched precision, yet their reasoning remains almost entirely opaque.

This is precisely the challenge that Explainable AI (XAI) strives to solve.

The Black Box Dilemma

In the field of AI, a black box refers to a complex system—such as a deep neural network—whose internal workings escape human understanding. In our medical example, the AI simply announces: “There’s a suspicious lesion here, with 94% certainty.” But a doctor needs far more than a simple percentage. They must understand what the AI “saw”: a particular texture? A specific shape? An abnormal density?

Faced with this opacity, the radiologist finds themselves in an uncomfortable position. They can either blindly accept the recommendation by trusting the machine without being able to verify its reasoning, or reject it at the risk of forgoing a tool potentially more accurate than the human eye.

This lack of transparency raises major concerns. First, the risk of bias and errors: if the AI was trained on flawed or biased data, its decisions may prove unjust or incorrect—biases impossible to detect without explanation. Second, the question of trust: how can healthcare professionals, patients, and regulators trust tools they don’t understand, especially when lives are at stake? Finally, the problem of accountability: in case of diagnostic error, who is responsible? The radiologist who followed the recommendation? The algorithm’s designer? The hospital that implemented it?

Toward Transparency: Explainable AI

Explainable AI (XAI) directly addresses this dilemma. Its objective: to create AI models whose decisions are understandable to humans, effectively transforming the “black box” into a “glass box.”

Far from replacing radiologists, XAI aims to assist them by becoming a true diagnostic partner. Thanks to the explanations provided, doctors can now understand the AI’s reasoning and validate its relevance, detect potential biases or errors in the model, and even learn by discovering patterns the human eye might have missed.

The radiologist example perfectly illustrates why XAI is not a luxury, but an absolute necessity for the ethical and responsible adoption of AI—particularly in sectors where human lives are at stake.

Today, we’ll dissect three game-changing XAI methods that are transforming clinical decision-making: SHAP, LIME, and Grad-CAM. But here’s the controversial truth most won’t tell you: while these tools promise transparency, they’re also creating new forms of dangerous over-confidence among clinicians. Let’s explore why understanding these methods isn’t just academically interesting—it’s becoming a matter of regulatory compliance and patient safety.

The AI Revolution Meets Clinical Reality: A Perfect Storm

Healthcare AI has reached an inflection point. The FDA has approved over 500 AI-enabled medical devices since 2019, while the European Medicines Agency (EMA) is drafting comprehensive AI regulations that will reshape how we deploy these technologies. Yet beneath this regulatory momentum lies a fundamental problem: most AI systems operate as impenetrable black boxes, making decisions through complex neural networks that even their creators cannot fully explain.

This opacity isn’t merely inconvenient—it’s dangerous. When a cardiologist relies on AI to interpret ECGs, they need more than a probability score. They require insight into which waveform characteristics influenced the diagnosis, whether the model focused on relevant cardiac markers, and how confident they should be in the recommendation. Without this understanding, AI becomes a sophisticated guessing game rather than a precision medicine tool.

The stakes couldn’t be higher. One widely cited, though contested, estimate ranks medical errors as the third leading cause of death in the United States. Adding unexplainable AI into this equation without proper transparency mechanisms could amplify rather than reduce diagnostic mistakes. This is where XAI methods become not just helpful, but essential for responsible AI deployment in clinical settings.

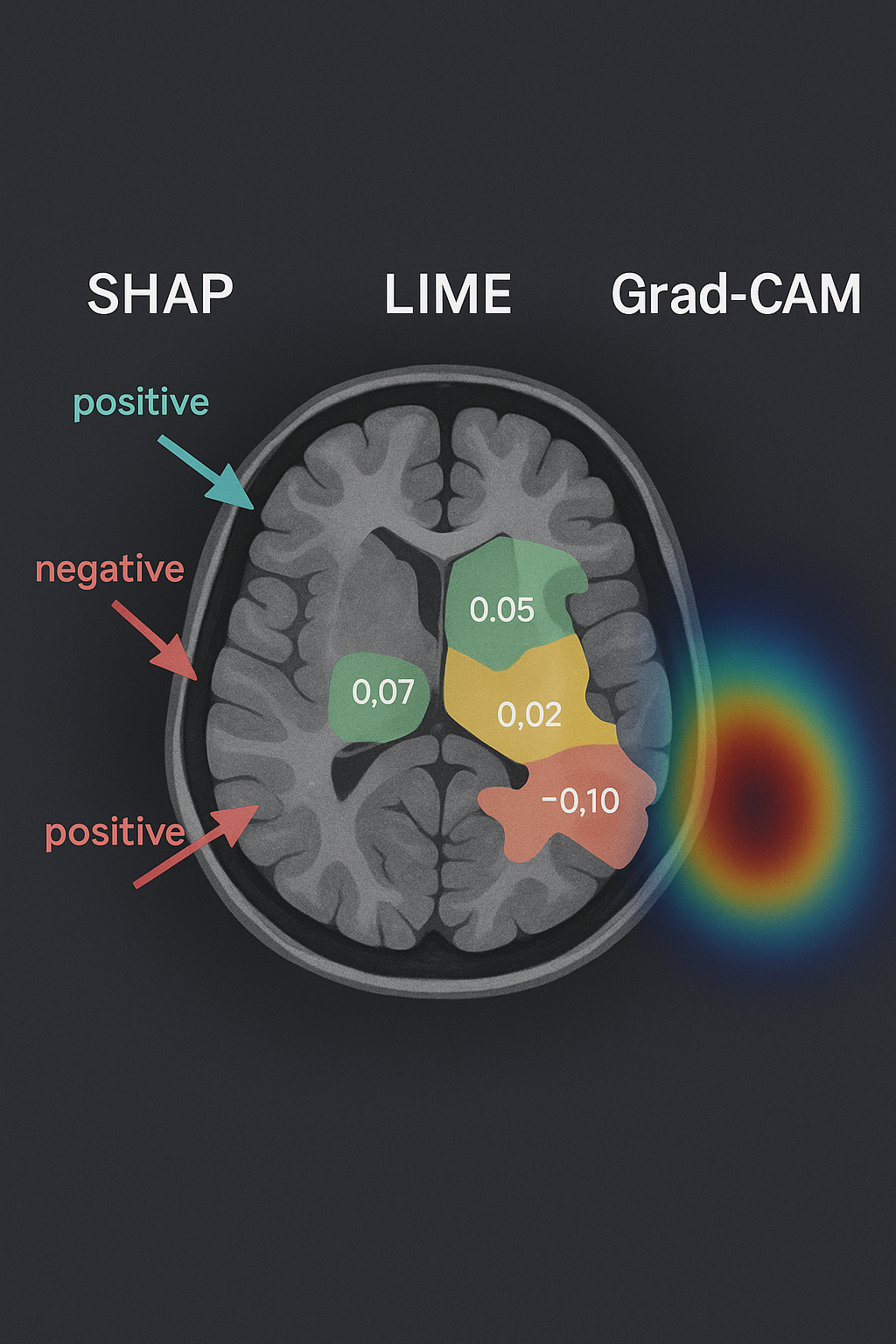

Unveiling AI’s Diagnostic Reasoning. These visualizations illustrate how Explainable AI (XAI) converts opaque algorithmic decisions into comprehensible medical insights. By applying SHAP, LIME, and Grad-CAM methodologies to MRI scans, the system pinpoints and visualizes the critical brain regions that shaped its diagnostic conclusion. This level of transparency empowers clinicians with a deeper understanding of the AI’s analytical process, fostering trust and informed decision-making in patient care.

SHAP: The Mathematical Powerhouse with a Transparency Problem

SHAP (SHapley Additive exPlanations) represents the most mathematically rigorous approach to AI explanation, built on game theory principles developed by Nobel laureate Lloyd Shapley. The method calculates how much each input feature contributes to a model’s final prediction, distributing “credit” for the decision across all variables in a mathematically fair way.

Think of SHAP as a forensic accountant for AI decisions. When analyzing a chest X-ray for pneumonia, SHAP can quantify that lung opacity contributed +0.23 to the pneumonia score, while heart size contributed -0.05, and patient age added +0.12. These Shapley values always sum to the difference between the model’s prediction and its baseline, ensuring complete mathematical consistency.
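To make the "credit always sums to the gap" property concrete, here is a minimal sketch that computes exact Shapley values by brute force for a three-feature toy model. Everything in it is an assumption for illustration: the `toy_score` function, its coefficients, the patient values, and the baseline are invented, and production tools such as the `shap` package rely on faster approximations rather than this exhaustive loop.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one input `x` relative to `baseline`.

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(x)
    features = range(n)

    def value(coalition):
        # Model output when only the features in `coalition` take their real values.
        mixed = [x[i] if i in coalition else baseline[i] for i in features]
        return predict(mixed)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy "pneumonia score"; inputs and coefficients are invented.
def toy_score(features):
    opacity, heart_size, age = features
    return 0.1 + 0.5 * opacity - 0.2 * heart_size + 0.3 * age + 0.4 * opacity * age


x = [0.8, 0.5, 0.6]         # the patient being explained
baseline = [0.2, 0.4, 0.3]  # reference input, e.g. dataset averages

phi = shapley_values(toy_score, x, baseline)
gap = toy_score(x) - toy_score(baseline)
print("attributions:", [round(p, 4) for p in phi])
print("sum of attributions:", round(sum(phi), 4), "prediction minus baseline:", round(gap, 4))
```

The two numbers on the last line match, which is the efficiency property in action. Note that the attributions depend on the chosen baseline, so a different reference patient yields different credit assignments.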

This axiomatically sound approach makes SHAP incredibly appealing for regulatory compliance. Shapley values satisfy four mathematical properties: efficiency, symmetry, the dummy (null-player) property, and additivity. For healthcare organizations facing increasing scrutiny from regulators, SHAP offers a formal guarantee about how credit is distributed across features, although that guarantee says nothing about whether the underlying model is clinically correct.

However, SHAP’s theoretical elegance masks practical limitations that could prove dangerous in clinical settings. Recent meta-analyses reveal that SHAP achieves only 38% fidelity in medical imaging tasks, with confidence intervals spanning 0.35-0.41. This means SHAP explanations accurately reflect the model’s actual decision-making process less than 40% of the time—a sobering statistic for life-critical applications.

The computational burden presents another challenge. Generating SHAP explanations for complex medical images can take minutes rather than seconds, creating workflow bottlenecks in time-sensitive clinical environments. Emergency departments and intensive care units operate on second-by-second timescales where delayed explanations become clinically irrelevant.
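Much of that cost comes from combinatorics: an exact Shapley computation must score every possible coalition of features, and the number of coalitions doubles with each added feature. The sketch below shows one common workaround, averaging marginal contributions over randomly sampled feature orderings. It is an illustrative assumption rather than the algorithm any particular library uses, and the toy model and its numbers are invented.

```python
import random


def sampled_shapley(predict, x, baseline, n_permutations=200, seed=0):
    """Monte Carlo Shapley estimate from random feature orderings."""
    rng = random.Random(seed)
    n = len(x)
    phi = [0.0] * n
    for _ in range(n_permutations):
        order = list(range(n))
        rng.shuffle(order)
        current = list(baseline)
        previous_output = predict(current)
        for i in order:
            current[i] = x[i]  # switch feature i to its real value
            output = predict(current)
            phi[i] += output - previous_output  # marginal contribution in this ordering
            previous_output = output
    return [total / n_permutations for total in phi]


# Hypothetical toy risk score; coefficients are invented for illustration.
def toy_score(features):
    opacity, heart_size, age = features
    return 0.1 + 0.5 * opacity - 0.2 * heart_size + 0.3 * age + 0.4 * opacity * age


x = [0.8, 0.5, 0.6]
baseline = [0.2, 0.4, 0.3]
estimate = sampled_shapley(toy_score, x, baseline)
print([round(v, 3) for v in estimate])

n_features = 20
print(2 ** n_features)         # coalitions an exact computation must score: 1048576
print(200 * (n_features + 1))  # model calls for 200 sampled orderings: 4200
```

Sampling trades exactness for speed: the estimates still sum to the prediction-minus-baseline gap, but individual attributions carry sampling noise that shrinks only as more orderings are drawn. For images, where each pixel or superpixel is a feature, that trade-off is what pushes run times from seconds toward minutes.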

Perhaps most concerningly, SHAP explanations can surface, and appear to legitimize, bias in medical contexts. When the underlying model is trained on historically biased datasets, SHAP may highlight demographic features like age or gender as important diagnostic factors, potentially perpetuating rather than eliminating healthcare disparities. A recent study found SHAP attributions for sepsis prediction models disproportionately emphasized race-correlated features, raising serious questions about algorithmic fairness.

LIME: The Fast and Intuitive Explanation Engine

LIME (Local Interpretable Model-Agnostic Explanations) takes a radically different approach to AI transparency. Rather than deriving attributions from game-theoretic axioms, LIME explains each individual prediction by fitting a simplified, interpretable model around that specific data point. Imagine LIME as a skilled translator, converting complex AI decisions into language clinicians can understand.

The method works by perturbing the input data around the instance being explained, observing how these changes affect the model’s predictions, then fitting a simple linear model to approximate the AI’s behavior in that local region. For medical imaging, LIME might highlight image regions that most influenced a diagnosis, showing radiologists exactly where the AI “looked” to make its decision.
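The perturb, observe, and fit loop described above can be sketched in a few dozen lines. This is a simplified tabular version written from scratch, not the `lime` package itself: the Gaussian perturbations, the exponential proximity kernel, the parameter defaults, and the `toy_black_box` model are all assumptions chosen for illustration. For images, the real method perturbs superpixels rather than raw numbers.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_style_explanation(predict, x, n_samples=500, scale=0.1,
                           kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around `x`.

    Returns (intercept, coefficients) of the local linear model.
    """
    rng = random.Random(seed)
    d = len(x)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]           # perturbed neighbour
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        rows.append([1.0] + z)                                 # leading 1.0 = intercept
        targets.append(predict(z))                             # query the black box
        weights.append(math.exp(-dist2 / kernel_width ** 2))   # closer samples count more
    # Weighted normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows))
             for j in range(d + 1)] for i in range(d + 1)]
    xtwy = [sum(w * r[i] * y for w, r, y in zip(weights, rows, targets))
            for i in range(d + 1)]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[0], beta[1:]


# Hypothetical nonlinear "black box" with two inputs; numbers are invented.
def toy_black_box(features):
    opacity, heart_size = features
    return 1.0 / (1.0 + math.exp(-(6.0 * opacity - 2.0 * heart_size - 1.0)))


intercept, coefs = lime_style_explanation(toy_black_box, [0.5, 0.5])
print([round(c, 3) for c in coefs])  # local slopes: positive for opacity, negative for heart size
```

The coefficients are only valid near the explained instance, and they depend on the random samples drawn. Changing `seed`, `scale`, or `kernel_width` changes the explanation, which is worth keeping in mind when reading a single LIME output.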

LIME’s strength lies in its intuitive explanations and impressive fidelity scores. Meta-analyses show LIME achieving 81% fidelity (confidence interval: 0.78-0.84) in clinical decision tasks—more than double SHAP’s performance. This high fidelity means LIME explanations accurately reflect the underlying model’s decision-making process four times out of five, making them far more reliable for clinical interpretation.

The speed advantage is equally compelling. LIME generates explanations in seconds rather than minutes, making it practical for real-time clinical workflows. Emergency physicians can receive immediate insights into why an AI system flagged a particular patient for sepsis risk, allowing for rapid clinical correlation and decision-making.

Yet LIME’s apparent superiority comes with hidden dangers that could mislead clinicians. The method’s fundamental limitation lies in its local focus—LIME explains individual predictions without considering global model behavior. This narrow view can create false confidence when explanations appear consistent across similar cases but fail to capture broader algorithmic patterns.

Instability presents another critical flaw. LIME explanations can vary significantly when applied to nearly identical inputs, a phenomenon that becomes problematic when clinicians start relying on explanation patterns for diagnostic reasoning. A recent study found that LIME explanations for pneumonia detection varied by up to 30% when applied to chest X-rays differing by just a few pixels—variability that could lead to conflicting clinical interpretations.

The global coverage problem compounds these issues. While LIME excels at explaining individual cases, it provides no insight into overall model behavior, systematic biases, or edge cases. Clinicians might develop false confidence in AI systems based on locally accurate explanations while remaining blind to global algorithmic failures.

Grad-CAM: Visual Intelligence for Medical Imaging

Grad-CAM (Gradient-weighted Class Activation Mapping) represents the most visually intuitive XAI approach, designed specifically for convolutional neural networks that process images. Unlike SHAP and LIME, which are model-agnostic and typically report per-feature scores, Grad-CAM uses the network’s own gradients to generate heat maps showing which image regions most influenced the model’s decision.

The method leverages gradients flowing through convolutional neural networks to identify important image regions, then creates weighted combinations of feature maps to produce localization maps. When a dermatologist uses AI to analyze a skin lesion, Grad-CAM can overlay a heat map showing precisely which areas the model considered most suspicious, creating an immediate visual dialogue between human expertise and artificial intelligence.
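The core arithmetic is short enough to sketch directly. The function below assumes the feature maps of one convolutional layer and the gradients of the class score with respect to those maps have already been extracted (in practice via framework hooks, which are omitted here). The tiny 2x2 arrays are invented so the result can be checked by hand.

```python
def grad_cam(activations, gradients):
    """Grad-CAM map from one conv layer's feature maps and class-score gradients.

    Both arguments are nested lists shaped [channels][height][width].
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights: global-average-pool the gradients of each feature map.
    alphas = [sum(map(sum, grad)) / (height * width) for grad in gradients]
    # Weighted combination of the feature maps.
    cam = [[0.0] * width for _ in range(height)]
    for alpha, fmap in zip(alphas, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * fmap[i][j]
    # ReLU keeps only regions that push the class score up.
    cam = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]  # scale to [0, 1] for display
    return cam


# Invented example: channel 0 supports the class, channel 1 opposes it.
activations = [
    [[1, 0], [0, 0]],  # channel 0 fires in the top-left cell
    [[0, 0], [0, 2]],  # channel 1 fires in the bottom-right cell
]
gradients = [
    [[1, 1], [1, 1]],      # positive gradient: more of channel 0 raises the score
    [[-1, -1], [-1, -1]],  # negative gradient: more of channel 1 lowers it
]

cam = grad_cam(activations, gradients)
print(cam)  # [[1.0, 0.0], [0.0, 0.0]]
```

Only the top-left cell lights up: the bottom-right activation is strong, but because it argues against the class, the ReLU step removes it. A heat map therefore shows evidence for the predicted class, not everything the network responded to.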

This visual approach proves particularly powerful for medical imaging applications. Radiologists, pathologists, and dermatologists think visually, making Grad-CAM explanations immediately interpretable within existing clinical workflows. The method requires no mathematical background to understand—bright regions indicate high importance, dark regions suggest minimal influence on the diagnosis.

Grad-CAM’s versatility extends beyond traditional medical imaging. Recent innovations have adapted the method for medical text analysis, creating visual representations of which words or phrases influenced AI decisions in clinical notes, research papers, and diagnostic reports. This cross-modal capability makes Grad-CAM valuable across diverse healthcare applications.

However, Grad-CAM’s visual simplicity masks significant technical limitations that can mislead clinical users. The method achieves moderate fidelity scores (54%, confidence interval: 0.51-0.57) in medical applications—better than SHAP but substantially lower than LIME. This means Grad-CAM heat maps accurately represent model decision-making slightly more than half the time.

Resolution limitations present another critical constraint. Grad-CAM’s spatial resolution depends on the underlying convolutional neural network architecture, often producing coarse heat maps that miss fine-grained anatomical details crucial for medical diagnosis. A Grad-CAM explanation might highlight the general lung region for pneumonia detection while missing specific nodular patterns that influenced the AI’s decision.
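A quick back-of-the-envelope sketch shows where the coarseness comes from. Assume, for illustration, a network whose last convolutional layer outputs a 7x7 grid for a 224x224 input (typical of common ResNet-style backbones). Stretching that grid back to image size means each heat-map cell covers a 32x32 pixel block. The helper name `upsample_nearest` is hypothetical.

```python
def upsample_nearest(cam, out_height, out_width):
    """Stretch a coarse heat map to image size by repeating each cell."""
    in_height, in_width = len(cam), len(cam[0])
    return [
        [cam[i * in_height // out_height][j * in_width // out_width]
         for j in range(out_width)]
        for i in range(out_height)
    ]


# A 7x7 map with a single "hot" cell.
coarse = [[0.0] * 7 for _ in range(7)]
coarse[3][4] = 1.0

heat = upsample_nearest(coarse, 224, 224)
hot_pixels = sum(v == 1.0 for row in heat for v in row)
print(hot_pixels)  # 32 * 32 = 1024
```

Real implementations usually interpolate bilinearly, which makes the overlay look smoother but adds no information: anything smaller than one grid cell, such as a small nodule, cannot be localized more precisely than its surrounding block.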

The method’s dependence on CNN architectures creates additional complications as healthcare AI evolves toward transformer-based models and multimodal systems. Grad-CAM requires significant adaptation for non-CNN architectures, limiting its applicability as AI technology advances beyond traditional convolutional approaches.

The Performance Reality Check: When Explanations Fail

Recent meta-analyses have shattered comfortable assumptions about XAI reliability in medical contexts. While these methods provide valuable insights into AI decision-making, their limitations create new categories of clinical risk that healthcare organizations must address systematically.

The fidelity crisis represents the most immediate concern. When SHAP achieves less than 40% fidelity, clinicians receive accurate explanations for fewer than two out of every five AI decisions. This low reliability means healthcare professionals might develop clinical reasoning based on fundamentally incorrect explanations, potentially leading to diagnostic errors or inappropriate treatment decisions.

Post-hoc explanation instability compounds this problem across all three methods. Small perturbations in input data can produce dramatically different explanations, even when the underlying AI prediction remains consistent. This instability becomes dangerous when clinicians start pattern-matching explanations rather than focusing on the AI’s actual diagnostic accuracy.
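One simple way to put a number on this instability is to compare which features two explanations rank as most important. The sketch below uses the Jaccard overlap of the top-k features. The metric choice, the function names, and the attribution vectors are assumptions for illustration, not a standard endorsed by the studies discussed here.

```python
def top_k_features(attributions, k):
    """Indices of the k features with the largest absolute attribution."""
    ranked = sorted(range(len(attributions)),
                    key=lambda i: abs(attributions[i]), reverse=True)
    return set(ranked[:k])


def top_k_overlap(attr_a, attr_b, k=3):
    """Jaccard overlap of the top-k feature sets of two explanations (1.0 = identical)."""
    a, b = top_k_features(attr_a, k), top_k_features(attr_b, k)
    return len(a & b) / len(a | b)


# Invented attributions for the same case before and after a tiny input change.
original = [0.40, -0.05, 0.30, 0.02, 0.20]
perturbed = [0.10, -0.35, 0.32, 0.01, 0.25]

print(top_k_overlap(original, perturbed))  # 0.5
```

An overlap of 0.5 means the two explanations agree on only two of the features they each rank in the top three, even if the model's prediction barely moved. Tracking a score like this across repeated runs or slightly perturbed inputs gives teams an early warning that explanations should not be read too literally.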

The over-confidence trap presents perhaps the most insidious risk. When presented with seemingly reasonable explanations, healthcare professionals often increase their trust in AI systems beyond what the underlying model performance justifies. This phenomenon, known as “explainability bias,” can lead clinicians to defer judgment to AI systems even when their own expertise suggests alternative diagnoses.

However, dismissing XAI methods due to these limitations would be equally dangerous. The solution lies not in abandoning explainable AI but in understanding how to combine methods strategically while maintaining appropriate skepticism about their outputs.

Strategic Implementation: A Multi-Method Framework for Clinical Success

The most successful healthcare AI implementations don’t rely on single XAI methods but instead combine multiple approaches to create robust, clinically useful explanation systems. This multi-method strategy leverages each technique’s strengths while compensating for individual weaknesses.

Consider a comprehensive brain tumor detection system that integrates all three XAI approaches. Grad-CAM provides immediate visual feedback, highlighting suspicious regions that align with radiologists’ visual reasoning patterns. LIME offers rapid, case-specific explanations that help clinicians understand individual diagnostic decisions. SHAP contributes mathematically rigorous feature importance scores that satisfy regulatory requirements and provide audit trails for quality assurance.

This integrated approach proved successful in a recent hepatic segmentation application where surgeons needed interpretable AI support for operative planning. LIME provided rapid explanations during surgical consultations, while SHAP generated detailed feature importance analyses for post-operative quality review. The combination improved surgical confidence while maintaining rigorous documentation standards.

The key insight is contextual deployment—matching XAI methods to specific clinical needs rather than applying one-size-fits-all solutions. Emergency departments might prioritize LIME’s speed for rapid triage decisions, while radiology departments might prefer Grad-CAM’s visual explanations for diagnostic reporting. Regulatory compliance teams might require SHAP’s mathematical rigor for audit purposes.

The Future of Transparent Medical AI: Beyond Current Methods

The next generation of medical XAI is evolving toward a comprehensive “three pillars” framework: transparency (understanding model architecture), interpretability (explaining individual decisions), and explicability (providing domain-relevant reasoning). This holistic approach addresses current XAI limitations while preparing for emerging regulatory requirements.

Uncertainty integration represents a crucial advancement. Future XAI systems will combine explanation methods with confidence intervals, showing not just why an AI made a decision but how certain it is about that reasoning. This uncertainty awareness becomes critical when AI systems encounter edge cases or novel pathologies outside their training distributions.

The development of healthcare-specific XAI benchmarks and standards promises to address current reliability issues. Rather than adapting general-purpose explanation methods to medical contexts, researchers are creating XAI approaches designed specifically for clinical workflows, anatomical reasoning, and medical decision-making patterns.

International regulatory harmonization is driving standardized approaches to medical AI transparency. The FDA’s proposed guidance on AI/ML-based Software as a Medical Device, combined with EU AI Act requirements, is creating global standards for explainable medical AI that will reshape how these systems are developed, validated, and deployed.

The Bottom Line: Transparency as a Strategic Imperative

The transition from black-box AI to explainable medical systems isn’t just a technical upgrade—it’s a fundamental shift in how we conceptualize the relationship between artificial and human intelligence in healthcare. SHAP, LIME, and Grad-CAM represent the first generation of tools making this transition possible, despite their current limitations.

The evidence is clear: healthcare organizations that proactively implement robust XAI frameworks will gain competitive advantages in regulatory compliance, clinical adoption, and patient trust. Those that continue deploying unexplainable AI systems risk regulatory sanctions, clinical resistance, and potential liability issues as transparency becomes a legal requirement rather than a technical nicety.

Yet success requires sophisticated understanding of each method’s strengths and limitations. Blind faith in XAI outputs can prove as dangerous as ignoring AI explanations entirely. The most effective approach combines multiple explanation methods with clinical expertise, regulatory compliance strategies, and ongoing performance monitoring.

The future of medical AI lies not in choosing between performance and interpretability but in achieving both through thoughtful implementation of explainable AI methods. As these technologies mature and regulatory frameworks solidify, healthcare organizations that master transparent AI deployment will lead the next phase of digital health transformation.

The revolution is already beginning. The question isn’t whether your organization will adopt explainable AI—it’s whether you’ll lead or follow in implementing these essential transparency tools.

Recommended Reading:

- Interpretable Machine Learning by Christoph Molnar – The definitive guide to understanding SHAP, LIME, and other XAI methods

- Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, edited by Wojciech Samek, Grégoire Montavon, Andrea Vedaldi, Lars Kai Hansen, and Klaus-Robert Müller

Disclaimer: This educational content was developed with AI assistance by a physician. It is intended for informational purposes only and does not replace professional medical advice. Always consult a qualified healthcare professional for personalized guidance. The information provided is valid as of the date indicated at the end of the article.
