Explainable AI revolutionizes medical imaging for brain and lung diagnosis, ensuring transparency and clinical trust in high-stakes healthcare decisions.

The Trust Crisis That’s Reshaping Medical AI

Picture a radiologist carefully examining a brain scan when their AI assistant flags a suspicious lesion with 94% confidence. The doctor naturally asks, “Why this area?” but the algorithm remains silent. It is as if the AI simply shrugs, leaving the practitioner to decide without knowing what the system saw. This scenario isn't science fiction: it is the daily reality for thousands of clinicians worldwide. Artificial intelligence in medical imaging has never seemed more promising, yet trust remains the primary barrier to genuine transformation.

In medicine, the stakes are critical, so a solution cannot merely be accurate; it must also be understandable. A misdiagnosis can have devastating consequences, which is why radiologists must be able to grasp why the AI flags an anomaly.

They need that understanding for three reasons. First, to validate the recommendation: the AI may have detected a detail that escaped the human eye, but the radiologist must be able to verify it independently. Second, to expand their knowledge: artificial intelligence can reveal unexpected correlations that enrich medical practice. Finally, to assume responsibility: the final decision and accountability always rest with the healthcare professional. When AI cannot explain its reasoning, practitioners are forced to take a “leap of faith.”

Trust is therefore the central challenge. Without buy-in from end users, the clinicians, even the most powerful tool will go unused. For AI to become a true partner rather than a mere crutch, it must evolve into a collaborative and transparent tool.

In brain and lung diagnosis—where a single missed finding can mean the difference between early intervention and terminal prognosis—we’re witnessing a seismic shift toward explainable AI (XAI). This isn’t just about making machines more transparent; it’s about fundamentally reimagining how humans and AI collaborate in the most critical moments of healthcare decision-making.

The stakes couldn't be higher. Brain and central nervous system cancers are diagnosed in roughly 300,000 people worldwide each year, while lung cancer remains the leading cause of cancer deaths globally. Traditional radiology, despite decades of refinement, still grapples with interpretation variability and diagnostic delays that can prove fatal. AI promises to bridge these gaps, but only if we can crack the code of explainability.

The Evolution of Medical Imaging AI: From Promise to Reality

The journey of AI in medical imaging reads like a tale of two cities: brilliant innovation shadowed by practical challenges. When Geoffrey Hinton’s deep learning revolution reached radiology in the 2010s, it seemed we were on the cusp of diagnostic perfection. Convolutional neural networks began matching or outperforming human specialists in narrow tasks, from detecting diabetic retinopathy on retinal photographs to identifying skin cancer in dermatology images.

Yet something curious happened on the way to clinical implementation. These algorithmic marvels, trained on millions of images, remained stubbornly opaque. A neural network might excel at spotting pneumonia on chest X-rays, but explaining its reasoning was like asking a master chef to describe why a dish tastes perfect—the knowledge was there, but largely ineffable.

Deep learning architectures, particularly convolutional neural networks, transformed medical imaging by automatically extracting hierarchical features from raw pixel data. Unlike traditional computer vision approaches that relied on handcrafted features, these networks learned to identify patterns at multiple scales—from edge detection in early layers to complex anatomical structures in deeper layers. This ability to learn feature representations directly from data represented a paradigm shift, enabling AI systems to detect subtle abnormalities that might escape human perception.
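To make the idea of an early-layer feature concrete, here is a sketch that applies a single hand-written vertical-edge filter to a toy image. It illustrates the operation only and is not part of any diagnostic model. The 6x6 image and the Sobel-like kernel are assumptions, and a real CNN learns its filter weights from data instead of having them specified by hand. Like most deep learning libraries, the sketch computes a cross-correlation with no padding.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` without padding (cross-correlation, as CNN libraries compute it)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 6x6 "scan": dark left half, bright right half, i.e. one vertical boundary.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge filter (Sobel-like). A trained CNN learns
# filters like this in its early layers instead of having them hard-coded.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-2.0, 0.0, 2.0],
                   [-1.0, 0.0, 1.0]])

response = conv2d_valid(image, kernel)
print(response[0])  # [0. 4. 4. 0.]  (every row is identical)
```

The response is nonzero only where the filter window straddles the boundary. Deeper layers combine low-level signals of this kind into shapes and, eventually, anatomical structures.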

However, this power came with a price. The very complexity that made these models effective also made them inscrutable. A typical diagnostic CNN might contain millions of parameters across hundreds of layers, creating decision pathways so intricate that understanding them seemed impossible. For radiologists accustomed to explaining their findings to colleagues and patients, this opacity was more than inconvenient—it was professionally untenable.
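A little arithmetic shows where the parameter counts come from. The helper below counts the learnable parameters in one convolution layer. The layer sizes in the example are illustrative assumptions, not figures from a particular diagnostic network.

```python
def conv_params(kernel_size: int, in_channels: int, out_channels: int,
                dims: int = 2, bias: bool = True) -> int:
    """Learnable parameters in one convolution layer.

    Each of the `out_channels` filters has kernel_size**dims * in_channels
    weights, plus one bias term per filter if `bias` is True.
    """
    weights = (kernel_size ** dims) * in_channels * out_channels
    return weights + (out_channels if bias else 0)

print(conv_params(3, 256, 256))          # 590080   (2D, 3x3 kernel)
print(conv_params(3, 256, 256, dims=3))  # 1769728  (3D, 3x3x3 kernel, as used for volumetric scans)
```

A single 3x3x3 layer with 256 input and 256 output channels already holds about 1.77 million parameters. A network that stacks a couple of dozen such layers therefore reaches tens of millions.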

The breakthrough came with the realization that explainability wasn’t just a nice-to-have feature; it was a fundamental requirement for clinical adoption. Studies began showing that even highly accurate AI systems faced resistance from clinicians who couldn’t understand their reasoning. Trust, it turned out, was just as important as accuracy in medical decision-making.

Why Explainability Became Medicine’s Make-or-Break Moment

The black box problem in medical AI isn’t merely technical—it’s profoundly human. Consider the cognitive load of a typical radiologist: hundreds of scans daily, each potentially harboring life-threatening pathology. When an AI system highlights an area of concern, the clinician needs to understand not just what the algorithm sees, but why it’s significant.

This trust deficit extends beyond individual practitioners to systemic challenges. Regulators such as the FDA, and the notified bodies that certify medical devices under the EU Medical Device Regulation, increasingly expect transparency about how AI-based devices reach their outputs. The reasoning is sound: how can you validate a diagnostic tool if you can't understand its decision-making process? Traditional statistical methods allowed for post-hoc analysis of feature importance and decision boundaries. Deep learning models, with their millions of interconnected parameters, defied such straightforward interpretation.

The ethical implications are equally profound. Medical AI systems, like all machine learning models, can perpetuate and amplify biases present in training data. Without explainability, detecting these biases becomes nearly impossible. A model that systematically underperforms on certain demographic groups might maintain high overall accuracy while creating dangerous disparities in care quality.
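Catching such a gap does not require an explanation method at all. It requires breaking performance down by subgroup. The sketch below uses hypothetical toy labels, not real patient data, to show how 92% overall accuracy can coexist with 40% accuracy on a small subgroup.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Overall accuracy plus per-subgroup accuracy, so gaps hidden by the average become visible."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group

# Hypothetical toy data: 90 cases from group "A", 10 from group "B", all truly positive.
y_true = [1] * 100
y_pred = [1] * 88 + [0] * 2 + [1] * 4 + [0] * 6
groups = ["A"] * 90 + ["B"] * 10

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall, per_group)  # overall 0.92; A is about 0.978; B is 0.4
```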

Consider a commonly reported failure mode: an AI system trained primarily on data from academic medical centers systematically underperforms when deployed in community hospitals with different patient populations and imaging equipment. Without explainable outputs, such failures can remain hidden until they cause real harm.

Patient autonomy represents another crucial dimension. Informed consent—a cornerstone of medical ethics—requires patients to understand their diagnosis and treatment options. How can a physician explain a diagnosis based on an AI system’s opaque recommendations? Explainable AI transforms this dynamic, enabling clinicians to share not just conclusions but reasoning with their patients.

The malpractice implications are equally significant. In an era where AI-assisted diagnoses are becoming routine, legal systems are grappling with questions of liability and standard of care. Explainable AI provides the documentation and reasoning chains that legal systems require to assess medical decision-making fairly.

Decoding the Technical Arsenal: How XAI Actually Works

The field of explainable AI has developed a sophisticated toolkit for opening the black box, each method offering unique insights into algorithmic decision-making. Understanding these approaches is crucial for appreciating how they’re revolutionizing medical imaging.

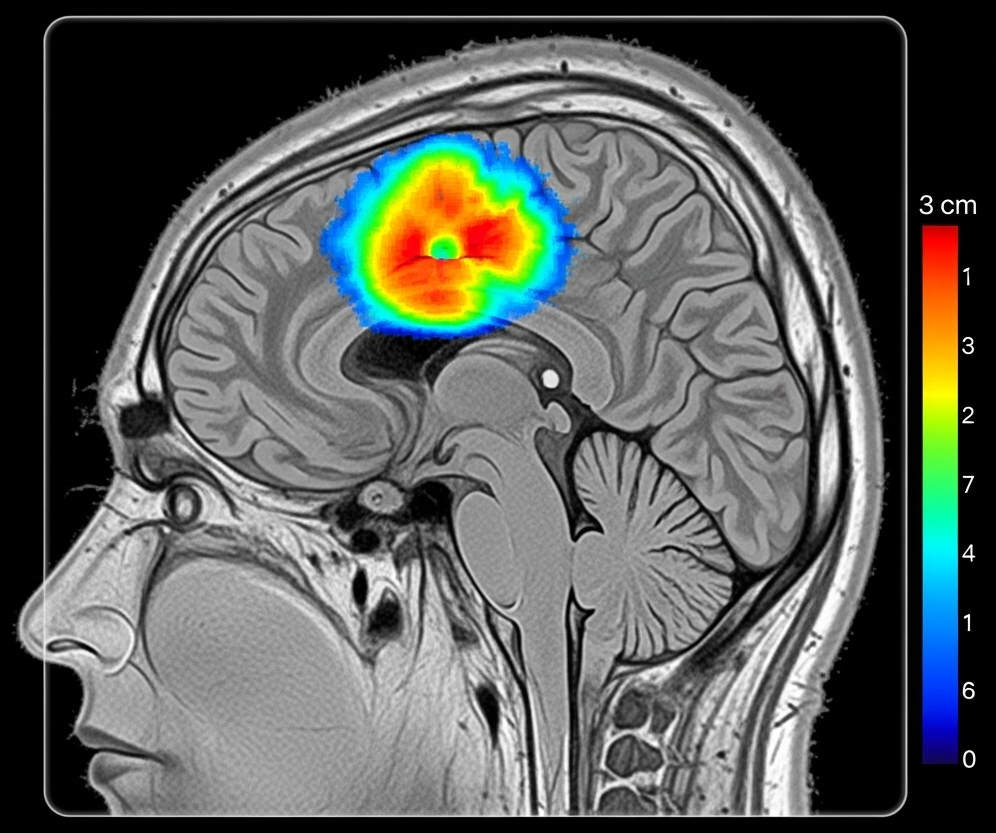

Gradient-based methods are among the most intuitive ways to probe neural network decisions. Simple saliency maps examine how small changes in input pixels affect the final prediction. Gradient-weighted Class Activation Mapping (Grad-CAM) works one level up. It backpropagates the gradient of the class score to the last convolutional layer, averages those gradients to weight each feature map, and combines the weighted maps into a heatmap. Imagine highlighting which regions of a brain MRI contributed most strongly to a tumor classification. That is essentially what Grad-CAM accomplishes, at the resolution of the feature maps rather than individual pixels.
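Once the target layer's activations and gradients are available, the core Grad-CAM computation takes only a few lines. The sketch below assumes they have already been extracted, which in practice is done with the autograd hooks of a framework such as PyTorch or TensorFlow. To keep the example self-contained, it uses a hypothetical head that global-average-pools two feature maps and applies a linear layer. For that head, the gradients can be written down analytically.

```python
import numpy as np

def grad_cam(feature_maps: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one convolutional layer.

    feature_maps: activations A_k of the layer, shape (K, H, W)
    gradients:    d(class score)/dA_k for the same layer, shape (K, H, W)
    """
    # Channel importance: global-average-pool each gradient map.
    alphas = gradients.mean(axis=(1, 2))                               # (K,)
    # Weighted sum of the feature maps; ReLU keeps only positive evidence.
    cam = np.maximum(np.tensordot(alphas, feature_maps, axes=1), 0.0)  # (H, W)
    # Scale to [0, 1] for display (upsampling to image size is omitted).
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy layer with K=2 feature maps of size 4x4.
K, H, W = 2, 4, 4
A = np.zeros((K, H, W))
A[0, 1:3, 1:3] = 1.0  # channel 0 responds to a central "lesion"
A[1, 0, :] = 1.0      # channel 1 responds along the top edge

# Hypothetical head: score = sum_k w[k] * mean(A[k]).
# For this head, d(score)/dA[k, i, j] = w[k] / (H * W) at every position.
w = np.array([2.0, -1.0])
grads = np.broadcast_to((w / (H * W))[:, None, None], (K, H, W))

heatmap = grad_cam(A, grads)
print(heatmap)
```

Channel 1 counts against the class, so the ReLU removes its contribution, and the map lights up only the central "lesion". The heatmap has the resolution of the feature maps (4x4 here). This is why Grad-CAM output must be upsampled and looks coarse on the original image.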

However, Grad-CAM has limitations. It provides coarse localization and can be noisy, particularly in complex medical images with subtle abnormalities. Newer variants like Grad-CAM++ and Score-CAM address some limitations but remain fundamentally post-hoc explanations that may not accurately reflect the model’s true decision-making process.

LIME (Local Interpretable Model-Agnostic Explanations) takes a different approach, creating interpretable approximations of complex models around specific predictions. For a suspicious lung nodule, LIME might segment the image into superpixels and determine which regions most influence the classification. This method’s model-agnostic nature makes it broadly applicable, but its local approximations may miss global patterns crucial for medical diagnosis.
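A stripped-down version of the LIME procedure fits in a few lines. This sketch is not the `lime` package. It makes several simplifying assumptions: a grid of four square "superpixels", a flat baseline value for switched-off regions, a distance defined as the fraction of superpixels switched off, and a toy model. Together they stand in for the segmentation, per-region fill, and cosine-based weighting a full implementation would use.

```python
import numpy as np

def lime_superpixels(image, segments, predict, num_samples=500,
                     kernel_width=0.25, seed=0):
    """Simplified LIME explanation of one image.

    image:    2D array
    segments: int array, same shape, labelling superpixels 0..S-1
    predict:  black-box function mapping an image to a scalar score
    Returns one weight per superpixel from a locally weighted linear fit.
    """
    rng = np.random.default_rng(seed)
    n_seg = int(segments.max()) + 1
    # Random on/off pattern per sample; row 0 keeps the original image.
    z = rng.integers(0, 2, size=(num_samples, n_seg))
    z[0] = 1
    baseline = image.mean()  # switched-off superpixels get a flat value
    scores = np.array([
        predict(np.where(mask[segments] == 1, image, baseline)) for mask in z
    ])
    # Proximity weight: samples that keep more superpixels count more.
    distance = 1.0 - z.mean(axis=1)
    weights = np.exp(-(distance ** 2) / kernel_width ** 2)
    # Weighted least squares with an intercept column.
    X = np.hstack([np.ones((num_samples, 1)), z])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], scores * sw, rcond=None)
    return coef[1:]  # drop the intercept

# Toy 4x4 image split into four 2x2 "superpixels".
segments = np.array([[0, 0, 1, 1],
                     [0, 0, 1, 1],
                     [2, 2, 3, 3],
                     [2, 2, 3, 3]])
image = np.full((4, 4), 0.2)
image[:2, :2] = 1.0  # bright region in superpixel 0

def toy_model(img):
    """Hypothetical classifier that only looks at the top-left region."""
    return float(img[:2, :2].mean())

weights = lime_superpixels(image, segments, toy_model)
print(np.round(weights, 3))
```

The toy model responds only to the top-left region. The surrogate therefore assigns that region a weight of about 0.6 and gives the other three regions essentially zero weight. With a real classifier, the linear fit is only an approximation of the model's behavior near this one image.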

SHAP (SHapley Additive exPlanations) brings game theory to AI interpretation, using Shapley values to fairly attribute each feature’s contribution to a prediction. In medical imaging, this might mean quantifying how much a tumor’s size, location, and texture each contribute to a malignancy assessment. SHAP’s theoretical foundation provides formal guarantees. One is local accuracy: the attributions sum exactly to the gap between the prediction and a baseline output. Another is consistency.
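When there are only a handful of features, Shapley values can be computed exactly by enumerating every coalition, which makes the definition easy to inspect. The sketch below does this for a hypothetical malignancy score built from three pre-extracted descriptors (size, location, texture). The effect sizes are made up for illustration. Libraries such as SHAP rely on approximations and model-specific algorithms instead, because exact enumeration grows as 2^n.

```python
from itertools import combinations
from math import factorial

def shapley_values(features, value):
    """Exact Shapley values by enumerating all coalitions (only feasible for a few features).

    value(coalition) returns the model output when only the features in the
    frozenset `coalition` are present and the rest are held at a baseline.
    """
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {f}) - value(s))  # marginal contribution of f
        phi[f] = total
    return phi

FEATURES = ["size", "location", "texture"]

def toy_malignancy_score(present):
    """Hypothetical score: baseline 0.1, additive effects, plus a size x texture interaction."""
    score = 0.1
    score += 0.3 if "size" in present else 0.0
    score += 0.05 if "location" in present else 0.0
    score += 0.2 if "texture" in present else 0.0
    score += 0.1 if {"size", "texture"} <= present else 0.0
    return score

phi = shapley_values(FEATURES, toy_malignancy_score)
print({k: round(v, 3) for k, v in phi.items()})
# {'size': 0.35, 'location': 0.05, 'texture': 0.25}
```

The size-texture interaction (0.1) is split evenly between those two features. The three values sum to the difference between the full-feature score and the baseline score, which is the local accuracy property.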

Attention mechanisms represent a more fundamental approach to explainability. Rather than retrofitting explanations onto existing models, attention-based architectures learn to focus on relevant image regions as part of their core functionality. Vision Transformers, increasingly popular in medical imaging, naturally provide attention maps showing which image patches influence predictions. These maps can align well with clinically relevant anatomical structures. However, attention weights do not necessarily reveal which inputs actually drove a prediction.
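An attention map is simply a softmax over query-key similarities. The sketch below makes several simplifying assumptions: a single head, a single layer, and only the [CLS] token's attention to the image patches. Real Vision Transformers have many heads and layers, and their maps are often aggregated across them, for example with attention rollout. The query and key vectors are toy values.

```python
import numpy as np

def cls_attention_map(cls_query, patch_keys, grid_shape):
    """Single-head attention weights from the [CLS] query to each image patch, as a 2D map.

    cls_query:  (d,) query vector for the class token
    patch_keys: (N, d) key vectors, one per patch, N = rows * cols
    """
    d = cls_query.shape[0]
    scores = patch_keys @ cls_query / np.sqrt(d)  # scaled dot-product scores, shape (N,)
    scores = scores - scores.max()                # stabilise the exponentials
    weights = np.exp(scores)
    weights /= weights.sum()                      # softmax: weights sum to 1
    return weights.reshape(grid_shape)

# Toy example: 2x2 grid of patches, 4-dimensional keys.
query = np.array([1.0, 0.0, 0.0, 0.0])
keys = np.zeros((4, 4))
keys[3] = [4.0, 0.0, 0.0, 0.0]  # bottom-right patch aligns with the query
attn = cls_attention_map(query, keys, (2, 2))
print(np.round(attn, 3))
```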

Concept-based explanations push beyond pixel-level attributions to clinically meaningful concepts. Instead of highlighting individual pixels, these methods identify high-level concepts like “irregular border,” “central necrosis,” or “mass effect” that align with radiological terminology. This approach bridges the semantic gap between algorithmic reasoning and clinical thinking.
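One well-known concept-based method is Testing with Concept Activation Vectors (TCAV). The sketch below follows its two main steps under simplifying assumptions. First, the concept direction is the normalized difference of mean activations, whereas TCAV proper trains a linear classifier for this. Second, the activations and per-image gradients are synthetic stand-ins for values that would come from a real network.

```python
import numpy as np

def concept_vector(concept_acts, random_acts):
    """Unit vector pointing from random-example activations toward concept-example activations.

    Simplification: TCAV trains a linear classifier to find this direction;
    the difference of means is used here to keep the sketch short.
    """
    v = concept_acts.mean(axis=0) - random_acts.mean(axis=0)
    return v / np.linalg.norm(v)

def tcav_score(grads, cav):
    """Fraction of inputs whose class score increases along the concept direction.

    grads: (n_inputs, d) gradients of the class logit with respect to layer activations
    """
    return float(np.mean(grads @ cav > 0))

rng = np.random.default_rng(0)
# Synthetic 3-D activations: "irregular border" examples shifted along axis 0.
concept_acts = rng.normal(0.0, 0.1, size=(20, 3)) + np.array([1.0, 0.0, 0.0])
random_acts = rng.normal(0.0, 0.1, size=(20, 3))
cav = concept_vector(concept_acts, random_acts)

# Synthetic per-image gradients for five images of the target class.
grads = np.array([[0.5, 0.1, 0.0],
                  [0.8, -0.2, 0.1],
                  [0.3, 0.0, 0.2],
                  [-0.6, 0.1, 0.0],
                  [0.4, 0.3, -0.1]])
print(tcav_score(grads, cav))  # 0.8
```

A score of 0.8 means the class score rises along the "irregular border" direction for four of the five images. The original TCAV method also repeats the procedure with random concepts and tests whether the score is statistically significant.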

Prototype-based methods learn representative examples that capture key decision patterns. For brain tumor classification, the model might learn prototypical examples of different tumor types and explain new cases by similarity to these learned prototypes. This approach mirrors how radiologists often reason—comparing new cases to memorable examples from their experience.
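The similarity computation at the heart of prototype networks is compact. The sketch below uses the log-ratio similarity popularized by ProtoPNet. The two-dimensional embeddings, the prototypes, and their "meningioma-like" and "glioma-like" labels are all hypothetical.

```python
import numpy as np

def prototype_similarities(patch_embeddings, prototypes, eps=1e-4):
    """ProtoPNet-style similarity between an image and each learned prototype.

    patch_embeddings: (N, d) latent vectors for the image's N patches
    prototypes:       (P, d) learned prototype vectors
    Returns (P,) scores, each taken from the prototype's best-matching patch.
    """
    # Squared Euclidean distance between every patch and every prototype: (N, P)
    d2 = ((patch_embeddings[:, None, :] - prototypes[None, :, :]) ** 2).sum(axis=-1)
    # Log-ratio similarity: large when a patch sits close to a prototype.
    sim = np.log((d2 + 1.0) / (d2 + eps))
    return sim.max(axis=0)

# Hypothetical 2-D latent space with one prototype per tumor type.
prototype_labels = ["meningioma-like", "glioma-like"]
prototypes = np.array([[1.0, 0.0],
                       [0.0, 1.0]])
patches = np.array([[0.9, 0.1],   # closely resembles prototype 0
                    [0.2, 0.2],
                    [0.0, 0.0]])
scores = prototype_similarities(patches, prototypes)
best = int(np.argmax(scores))
print(prototype_labels[best], np.round(scores, 2))
```

In a full model, these similarity scores feed a linear layer that produces class logits. The explanation then shows the best-matching image patch next to the training patch the prototype represents.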

Brain Diagnosis: Where AI Meets Neuroscience

The application of explainable AI to brain diagnosis represents one of medicine’s most compelling success stories. The brain’s complex three-dimensional structure, captured through multiple imaging modalities, creates both opportunities and challenges for AI interpretation.

Consider glioblastoma detection, where explainable AI has achieved remarkable clinical integration. Modern systems don’t just identify suspicious lesions; they provide detailed reasoning that aligns with established radiological criteria. A typical explanation might highlight regions of contrast enhancement, areas of restricted diffusion, and patterns of perilesional edema—precisely the features a neuroradiologist would examine.

A groundbreaking example comes from federated learning implementations for brain tumor classification. The collaborative model developed by Mastoi et al. demonstrates how XAI can maintain transparency even when training across multiple institutions. Their system uses attention mechanisms to highlight tumor boundaries while providing confidence scores for different anatomical regions. When classifying a meningioma versus glioma, the system might show high attention to the tumor-brain interface (characteristic of meningiomas) while providing lower confidence scores for regions with imaging artifacts.

The clinical integration process reveals XAI’s practical benefits. Neuroradiologists report that explainable AI systems serve as sophisticated second readers, drawing attention to subtle findings that might be overlooked during busy clinical workflows. Dr. Maria Rodriguez, a neuroradiologist at Johns Hopkins, describes how XAI heatmaps “function like a junior resident pointing out areas of concern, but with superhuman consistency and no ego.”

Multi-parametric MRI analysis represents another frontier where explainability proves crucial. Brain tumors require assessment across multiple imaging sequences—T1-weighted, T2-weighted, FLAIR, and diffusion-weighted imaging. Explainable AI systems can integrate findings across these modalities while showing which sequences contribute most strongly to specific diagnostic conclusions. This multi-modal reasoning mirrors the complex thought processes of expert neuroradiologists.
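One simple, model-agnostic way to ask which sequence matters most is to occlude each one in turn and measure the drop in the model's score. The sketch below assumes a channel-first volume with one channel per sequence and uses a hypothetical linear toy model. Occlusion is only one option. Gradient-based or Shapley-based attributions can be aggregated per channel in a similar way.

```python
import numpy as np

SEQUENCES = ["T1", "T2", "FLAIR", "DWI"]

def sequence_importance(volume, predict, baseline=0.0):
    """Drop in the model's score when each MRI sequence (channel) is replaced by a baseline value.

    volume: array of shape (len(SEQUENCES), depth, height, width)
    """
    reference = predict(volume)
    importance = {}
    for c, name in enumerate(SEQUENCES):
        occluded = volume.copy()
        occluded[c] = baseline  # blank out one sequence, keep the others
        importance[name] = reference - predict(occluded)
    return importance

# Hypothetical model: a weighted sum of per-sequence mean intensities.
toy_weights = np.array([0.1, 0.2, 0.5, 0.2])

def toy_model(vol):
    return float(vol.mean(axis=(1, 2, 3)) @ toy_weights)

volume = np.ones((4, 8, 8, 8))
importance = sequence_importance(volume, toy_model)
print(max(importance, key=importance.get))  # FLAIR
```

Blanking a sequence creates inputs the model never saw during training. The measured drop therefore mixes genuine importance with the effect of distribution shift, a known caveat of occlusion-style methods.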

The technology has also proven valuable for treatment planning. AI systems can now identify eloquent brain regions (areas critical for speech, motor, or cognitive function) and explain how their proximity to tumors influences surgical risk assessment. These explanations help neurosurgeons communicate complex risk-benefit calculations to patients and families.

Pediatric applications showcase XAI’s adaptability to specialized contexts. Children’s brains undergo rapid developmental changes that can mimic pathology on imaging. Explainable AI systems trained on pediatric data can distinguish normal developmental patterns from true abnormalities while providing age-appropriate reference comparisons in their explanations.

Lung Diagnosis: Breathing Life into AI Transparency

Pulmonary imaging presents unique challenges for explainable AI, from the subtle ground-glass opacities of early-stage adenocarcinoma to the complex patterns of interstitial lung disease. Yet it’s precisely in these challenging scenarios that XAI demonstrates its greatest value.

Lung cancer screening represents the most mature application of explainable AI in chest imaging. Modern systems analyze low-dose CT scans not just for nodule detection but for comprehensive risk assessment. A typical explanation might detail nodule characteristics—size, morphology, growth rate, and location—while providing confidence intervals for malignancy risk. This granular feedback helps radiologists make nuanced decisions about follow-up intervals and biopsy recommendations.

The Lung-RADS classification system, developed by the American College of Radiology, provides a natural framework for XAI implementation. Explainable AI systems can categorize findings according to Lung-RADS criteria while showing which imaging features support each classification. For a category 4A (suspicious) nodule, an explanation might highlight the nodule’s size and solid component, which drive the category. It might also point to features such as spiculated margins or upper lobe location, which are established risk factors for malignancy.

COVID-19 pandemic applications accelerated XAI adoption in chest imaging. Healthcare systems needed rapid, reliable tools for triaging patients with suspected COVID-19 pneumonia. Explainable AI systems could identify characteristic patterns—ground-glass opacities, crazy-paving patterns, and posterior distribution—while providing confidence scores and differential diagnostic considerations. These explanations proved crucial for emergency physicians and intensivists who needed to make rapid treatment decisions.

The integration of clinical data with imaging findings represents a particularly powerful XAI application. Systems can now combine chest CT findings with laboratory values, clinical symptoms, and demographic factors to provide comprehensive risk assessments. For a patient with a pulmonary nodule, the explanation might integrate imaging characteristics with smoking history, family history, and inflammatory markers to provide personalized risk estimates.
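The simplest interpretable way to combine such inputs is an additive model on the log-odds scale, where each variable's contribution can be read off directly. The coefficients and patient values below are invented for illustration. They do not come from any validated nodule-risk model.

```python
import math

# Hypothetical coefficients for illustration only; not a validated clinical model.
INTERCEPT = -4.0
COEFS = {
    "nodule_diameter_mm": 0.15,
    "spiculated": 1.0,      # 1 if spiculated margins, else 0
    "pack_years": 0.02,     # smoking history
    "family_history": 0.5,  # 1 if a first-degree relative had lung cancer
}

def explain_risk(patient):
    """Predicted probability plus each input's additive contribution to the log-odds."""
    contributions = {name: coef * patient[name] for name, coef in COEFS.items()}
    logit = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    return probability, contributions

patient = {"nodule_diameter_mm": 12, "spiculated": 1, "pack_years": 30, "family_history": 0}
probability, contrib = explain_risk(patient)
print(round(probability, 3), contrib)
```

Each entry in `contrib` is an additive share of the log-odds, so the explanation is exact for a model of this form. Deep multimodal models need post-hoc tools such as SHAP to produce a comparable breakdown.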

Workflow integration has proven smoother in pulmonary imaging than in other specialties, partly because of standardized reporting already in place. Lung-RADS was modeled on BI-RADS for breast imaging, and together with PI-RADS for prostate imaging, these systems gave XAI developers templates to follow. The result is systems that feel natural to radiologists already familiar with structured reporting.

Quality assurance represents another significant benefit. XAI systems can flag cases where explanations don’t align with standard diagnostic criteria, helping identify potential errors before reports are finalized. Such automated checks can help reduce diagnostic discordance and improve department-level performance metrics.
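One way to implement such a check is to measure how much of an explanation's positive attribution falls inside the anatomy where the finding should be, such as a lung mask for a pulmonary nodule. The sketch below assumes a heatmap and a boolean mask of the same shape. The 0.8 threshold is an arbitrary illustrative value, not a validated cutoff.

```python
import numpy as np

def attribution_inside_mask(heatmap, mask):
    """Share of positive attribution that falls inside a boolean anatomical mask."""
    positive = np.clip(heatmap, 0.0, None)
    total = positive.sum()
    return float(positive[mask].sum() / total) if total > 0 else 0.0

def flag_for_review(heatmap, mask, threshold=0.8):
    """True when most of the explanation lies outside the expected anatomy.

    The 0.8 threshold is an arbitrary illustrative value.
    """
    return attribution_inside_mask(heatmap, mask) < threshold

lung_mask = np.zeros((4, 4), dtype=bool)
lung_mask[:, :3] = True      # toy "lung field": first three columns

on_target = np.zeros((4, 4))
on_target[1:3, 1] = 1.0      # attribution inside the lung field
off_target = np.zeros((4, 4))
off_target[0, 3] = 1.0       # attribution on, say, a marker outside the lungs

print(flag_for_review(on_target, lung_mask), flag_for_review(off_target, lung_mask))  # False True
```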

The Stubborn Challenges That Keep AI Developers Awake

Despite remarkable progress, explainable AI in medical imaging faces persistent challenges that threaten its broader adoption. The fidelity problem stands as perhaps the most fundamental issue—do post-hoc explanations accurately reflect the model’s true decision-making process, or are they sophisticated illusions?

Recent research has exposed concerning discrepancies between explanation methods. A study comparing Grad-CAM, SHAP, and LIME on identical chest X-ray cases found markedly different explanations for the same predictions. This variability raises a troubling question: if the explanation methods themselves cannot agree on why an AI system made a decision, how can clinicians trust any single explanation?
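Disagreement between explanation methods can be quantified rather than judged by eye. The sketch below compares two attribution maps of the same shape using a Spearman rank correlation and a top-k overlap. The helper names, the choice of k, and the random stand-in maps are assumptions.

```python
import numpy as np

def rank(values):
    """Ranks 0..n-1 (ties broken by position; adequate for continuous-valued maps)."""
    order = np.argsort(values, kind="stable")
    ranks = np.empty(len(values))
    ranks[order] = np.arange(len(values))
    return ranks

def spearman(map_a, map_b):
    """Spearman rank correlation between two attribution maps of the same shape."""
    ra, rb = rank(map_a.ravel()), rank(map_b.ravel())
    return float(np.corrcoef(ra, rb)[0, 1])

def top_k_overlap(map_a, map_b, k):
    """Fraction of the k highest-attribution pixels that the two maps share."""
    top_a = set(np.argsort(map_a.ravel())[-k:])
    top_b = set(np.argsort(map_b.ravel())[-k:])
    return len(top_a & top_b) / k

rng = np.random.default_rng(1)
gradcam_map = rng.random((8, 8))  # random stand-ins for two methods' outputs
lime_map = gradcam_map + rng.normal(0.0, 0.3, size=(8, 8))
print(round(spearman(gradcam_map, lime_map), 2), top_k_overlap(gradcam_map, lime_map, k=5))
```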

The annotation quality problem compounds these concerns. Medical imaging datasets require expert annotation, but inter-observer variability among radiologists creates inconsistent ground truth labels. When training XAI systems on noisy labels, the resulting explanations may reflect annotation biases rather than true pathophysiology. A model trained on data where radiologists disagreed on tumor boundaries might generate explanations that perpetuate these inconsistencies.
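Inter-observer variability in segmentation is usually summarized with an overlap score such as the Dice coefficient. The sketch below computes it for two hypothetical readers' masks. Returning 1.0 when both masks are empty is a convention, not a universal rule.

```python
import numpy as np

def dice(mask_a, mask_b):
    """Dice overlap between two binary segmentations: 2|A and B| / (|A| + |B|)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else float(2.0 * np.logical_and(a, b).sum() / denom)

reader_1 = np.zeros((4, 4), dtype=bool)
reader_1[1:3, 1:3] = True   # 4 pixels
reader_2 = np.zeros((4, 4), dtype=bool)
reader_2[1:3, 2:4] = True   # 4 pixels, 2 shared with reader_1

print(dice(reader_1, reader_2))  # 0.5
```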

Data heterogeneity presents another formidable challenge. Medical images vary dramatically across institutions due to different equipment manufacturers, imaging protocols, and patient populations. An XAI system that provides excellent explanations on GE scanners might fail catastrophically on Siemens equipment. This brittleness limits the generalizability that clinical deployment requires.

Algorithmic bias remains a persistent concern despite increased awareness. XAI systems trained predominantly on data from academic medical centers may underperform in community hospitals with different patient demographics. Without careful attention to representation, these systems might provide misleading explanations that mask systematic performance gaps.

The usability gap between research and clinical practice continues to frustrate adoption efforts. Academic XAI systems often require specialized software and computational resources that don’t exist in typical clinical workflows. Integrating these tools into existing PACS (Picture Archiving and Communication Systems) and RIS (Radiology Information Systems) remains technically challenging and economically daunting.

Regulatory uncertainty compounds these practical challenges. While the FDA has approved numerous AI diagnostic tools, guidance on explainability requirements is still evolving. Different regulatory bodies may have conflicting expectations for explanation depth and format, creating compliance burdens for developers and uncertainty for healthcare providers.

The computational overhead of explanation generation represents a practical constraint often overlooked in research settings. Real-time explanation generation for complex 3D imaging studies can require significant processing power, potentially slowing clinical workflows that already face time pressures.
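Counting forward passes gives a rough sense of why the choice of method matters. The sketch below counts the passes needed for occlusion sensitivity on a 3D volume. The 128-voxel volume, patch size, and stride are illustrative assumptions.

```python
def occlusion_passes(volume_shape, patch, stride):
    """Forward passes for occlusion sensitivity on a 3D volume: one per patch position, plus one unoccluded reference."""
    positions = 1
    for size in volume_shape:
        positions *= (size - patch) // stride + 1
    return positions + 1

print(occlusion_passes((128, 128, 128), patch=16, stride=16))  # 513
print(occlusion_passes((128, 128, 128), patch=16, stride=8))   # 3376
```

Halving the stride multiplies the cost by roughly eight in 3D. By comparison, Grad-CAM needs one forward and one backward pass, while a LIME-style explanation needs one forward pass per perturbed sample, often hundreds or thousands.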

Future Horizons: Where Medical AI Is Heading Next

The future of explainable AI in medical imaging promises to be even more transformative than its present achievements. Multi-modal integration represents the next major frontier, combining imaging data with genomic information, electronic health records, and real-time physiological monitoring to create comprehensive diagnostic portraits.

Imagine a system that doesn’t just identify a brain tumor on MRI but integrates findings with the patient’s genetic profile, previous imaging studies, and current symptoms to provide personalized treatment recommendations. The explanation might detail how specific genetic mutations influence imaging appearance while predicting response to targeted therapies. This level of integration would transform precision medicine from aspiration to reality.

Continuous learning systems represent another exciting development. Rather than static models deployed once and forgotten, future XAI systems will evolve continuously based on feedback from clinicians and patient outcomes. A radiologist’s corrections to AI explanations would automatically improve the system’s future performance, creating a collaborative learning environment that benefits from collective expertise.

Real-time interaction capabilities will revolutionize how clinicians engage with AI systems. Instead of passive explanation consumption, future interfaces will support natural language queries about AI reasoning. A radiologist might ask, “Why didn’t you flag the left upper lobe nodule?” and receive detailed explanations about size thresholds, morphological criteria, or comparison with prior studies.

The integration of augmented reality into radiology workflows opens fascinating possibilities for XAI visualization. Instead of separate explanation panels, future systems might overlay reasoning directly onto 3D anatomical models, allowing radiologists to explore AI logic in spatial context. Surgical planning would benefit enormously from such immersive explanation environments.

Federated learning approaches will enable collaborative model development while preserving patient privacy. Institutions could contribute to model training without sharing sensitive data, while XAI ensures that the resulting models remain interpretable across different healthcare systems. This collaborative approach could accelerate AI development while maintaining local customization.
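The aggregation step most often used in federated learning is federated averaging (FedAvg). It combines site models by averaging their parameters, weighted by local dataset size, so raw images never leave each hospital. The sketch below is a minimal version on toy parameter arrays. It is not a description of the Mastoi et al. system, whose aggregation details may differ.

```python
import numpy as np

def federated_average(client_weights, client_sizes):
    """FedAvg aggregation step.

    client_weights: one list of parameter arrays per site (same shapes at every site)
    client_sizes:   number of local training cases at each site
    Returns the size-weighted average of each parameter array.
    """
    total = float(sum(client_sizes))
    return [
        sum((n / total) * params for n, params in zip(client_sizes, layer))
        for layer in zip(*client_weights)
    ]

# Two hypothetical sites, each holding a one-layer model after local training.
site_a = [np.array([1.0, 1.0])]   # trained on 100 cases
site_b = [np.array([5.0, 5.0])]   # trained on 300 cases
global_model = federated_average([site_a, site_b], [100, 300])
print(global_model[0])  # [4. 4.]
```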

The emergence of foundation models in medical imaging—large, pre-trained models that can be fine-tuned for specific tasks—will democratize XAI access. Smaller healthcare providers won’t need extensive AI expertise to deploy sophisticated explainable AI systems, reducing implementation barriers and expanding access to advanced diagnostic tools.

Legal and ethical frameworks will mature alongside technical capabilities. Clear standards for AI explanation quality, bias assessment, and liability allocation will provide the regulatory certainty that healthcare systems need for confident adoption. Professional societies will develop competency requirements for AI-assisted radiology, ensuring that clinicians understand both the capabilities and limitations of their AI partners.

The Collaborative Imperative: Building AI That Serves Humanity

The future of explainable AI in medical imaging isn’t just a technical challenge—it’s fundamentally a collaborative endeavor that requires unprecedented cooperation between clinicians, technologists, patients, and regulators. The most sophisticated algorithms mean nothing if they can’t integrate seamlessly into human workflows and decision-making processes.

The path forward demands radical transparency in AI development. Healthcare providers need to understand not just what AI systems can do, but how they were trained, where they might fail, and how to interpret their outputs responsibly. This transparency extends to patients, who deserve to understand how AI contributes to their care and what alternatives exist.

We stand at an inflection point where explainable AI will either fulfill its promise of transforming medical imaging or remain a sophisticated research curiosity. The difference lies not in algorithmic sophistication but in our collective commitment to building systems that truly serve human needs. Brain and lung diagnosis represent just the beginning—every imaging specialty will soon face similar transformation opportunities.

The healthcare industry must resist the temptation to deploy AI for its own sake, focusing instead on tangible improvements in patient outcomes, clinical efficiency, and diagnostic accuracy. Explainable AI provides the foundation for this responsible deployment, ensuring that artificial intelligence amplifies human expertise rather than replacing it.

As we navigate this transformation, remember that the goal isn’t perfect AI but better healthcare. Explainable AI gives us the tools to achieve that vision, one transparent decision at a time. The future of medical imaging will be collaborative, interpretable, and fundamentally human-centered—because that’s the only future worth building.

Further Reading and Resources

For readers seeking deeper understanding of explainable AI in medical imaging, several essential resources provide comprehensive coverage:

Explainable Artificial Intelligence in Medical Imaging: Fundamentals and Applications by Khan & Saba (2025) offers the most current comprehensive review of XAI applications in medical imaging, with specific chapters dedicated to brain and lung diagnosis applications.

Key Research Papers:

- Muhammad et al. (2024) provide a systematic review of XAI approaches across medical imaging modalities in Medical Image Analysis

- Mastoi et al. (2025) demonstrate practical federated learning implementations for brain tumor classification in Nature Machine Intelligence

- Nazir et al. (2023) offer detailed technical surveys of XAI methodologies specifically for biomedical imaging in Artificial Intelligence in Medicine

- Chen et al. (2022) emphasize human-centered explanation design with practical lung diagnosis case studies in Radiology: Artificial Intelligence

Disclaimer: This educational content was developed with AI assistance by a physician. It is intended for informational purposes only and does not replace professional medical advice. Always consult a qualified healthcare professional for personalized guidance. The information provided is valid as of the date indicated at the end of the article.
